I had a great time at the recent Machine Learning for Artists hack day at Bocoup. I didn't actually accomplish much myself (I mostly resorted to drawing in my sketchbook), but I learned a lot from the projects presented, and I was fascinated to see my drawings used as the style source for style transfer, a project that K. Adam White, Kawandeep Virdee, and others took on during the event. New drawings of mine were created without my having to do anything! It was magical.

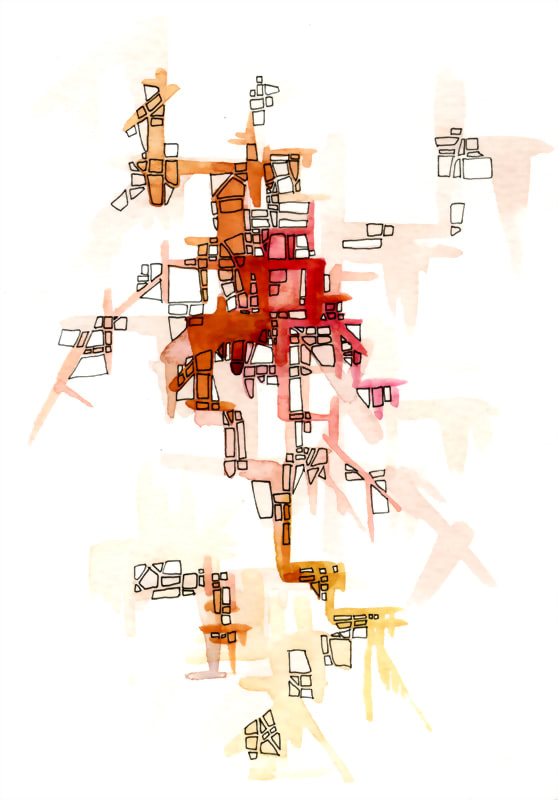

We ended up with this…

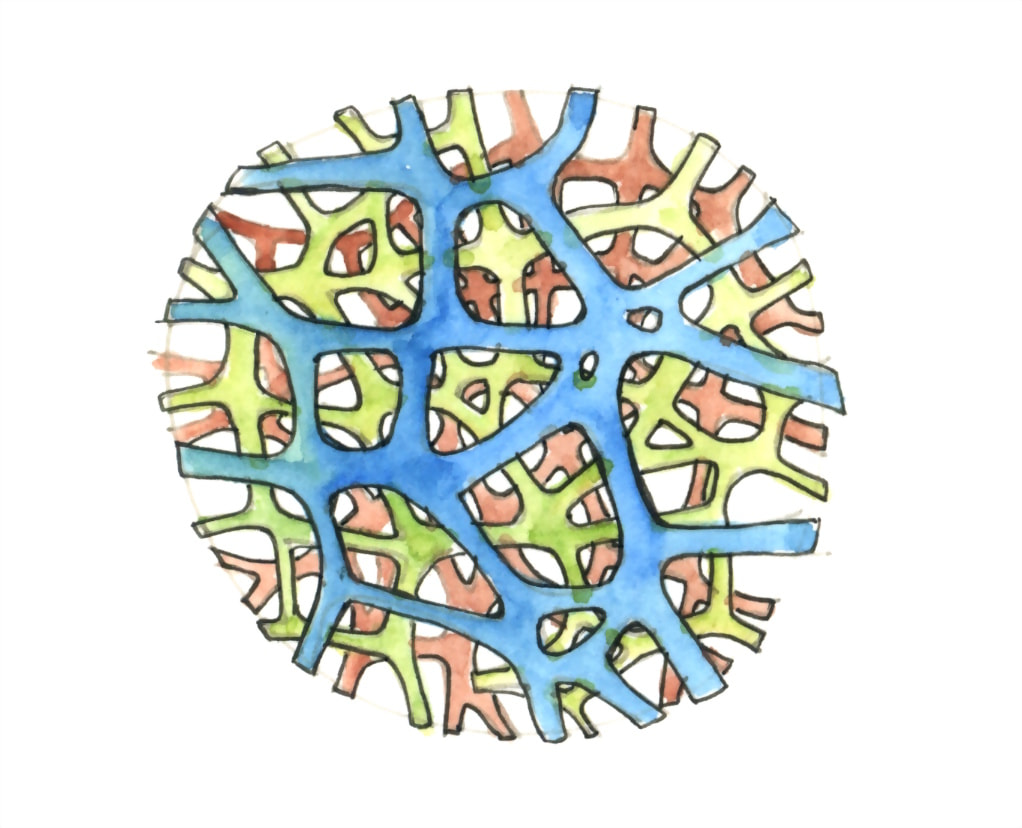

by combining these two

Style transfer is a machine learning process in which a content image is re-rendered in the style of a second, source image. If you've seen Google's Deep Dream project, with its hallucinatory puppyslugs in pop colors, style transfer is a close relative.

Google Maps transformed by my abstract map art

More recently, the Prisma app has become hugely popular for Instagram posts. It uses a similar technology, offering a range of artworks whose styles you can apply to your photos. When I gave it an image of my map art, it even added extra roads to it.

Using this local style transfer process is like being able to transform a photo Prisma-style into ANY type of artwork. So of course I got mappified :)

I'm excited to see what else can be done with this process, and especially whether it'll affect my drawing in any way. I'm already thinking about a fractal drawing process where I make progressively bigger drawings with the aid of these machine-hallucinated map details. Stay tuned!